Live Sound Mixing Rapidshare Download Free Software

For this eNTERFACE’17 workshop, we are looking for participants who want to learn and explore how to employ the RAPID-MIX API in their creative projects, integrating sensing technologies, digital signal processing (DSP), interactive machine learning (IML) for embodied interaction and audiovisual synthesis. It is helpful to think of RAPID-MIX-style projects as combining sensor inputs (LeapMotion, IMU, Kinect, BITalino, etc.) and media outputs with an intermediate layer of software logic, often including machine learning. Participants will gain practical experience with elements of the toolkit and with general concepts in ML, DSP and sensor-based interaction. We are adopting Design Sprints, an Agile UX approach, to deliver our workshops at eNTERFACE’17. This will be mutually beneficial: participants will learn how to use the RAPID-MIX API in creative projects, and we will learn about their experiences and improve the toolkit.
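The three-layer idea (sensor input, an intermediate learned mapping, media output) can be illustrated with a minimal sketch. This is not the RAPID-MIX API: the function name `map_sensor_to_param`, the training examples and the choice of inverse-distance-weighted nearest-neighbour regression are all assumptions made for illustration.

```python
import math

# Hypothetical training examples: (sensor feature vector, synth parameter).
# The features stand in for two normalised sensor axes and the target is a
# filter cutoff in Hz. None of these values come from the toolkit.
EXAMPLES = [
    ((0.0, 0.0), 200.0),
    ((1.0, 0.0), 800.0),
    ((0.0, 1.0), 1500.0),
    ((1.0, 1.0), 4000.0),
]


def map_sensor_to_param(features, examples=EXAMPLES, k=2):
    """Inverse-distance-weighted k-nearest-neighbour regression."""
    # Keep the k training examples closest to the incoming sensor frame.
    ranked = sorted(examples, key=lambda ex: math.dist(features, ex[0]))[:k]
    # An exact match on a training point returns its target directly
    # (this also avoids dividing by a zero distance below).
    if math.dist(features, ranked[0][0]) == 0.0:
        return ranked[0][1]
    weights = [1.0 / math.dist(features, x) for x, _ in ranked]
    return sum(w * y for w, (_, y) in zip(weights, ranked)) / sum(weights)
```

In a real project the feature vector would come from a sensor stream and the returned value would drive a synthesis parameter. The point of the sketch is only that the middle layer is learned from examples rather than hand-coded.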

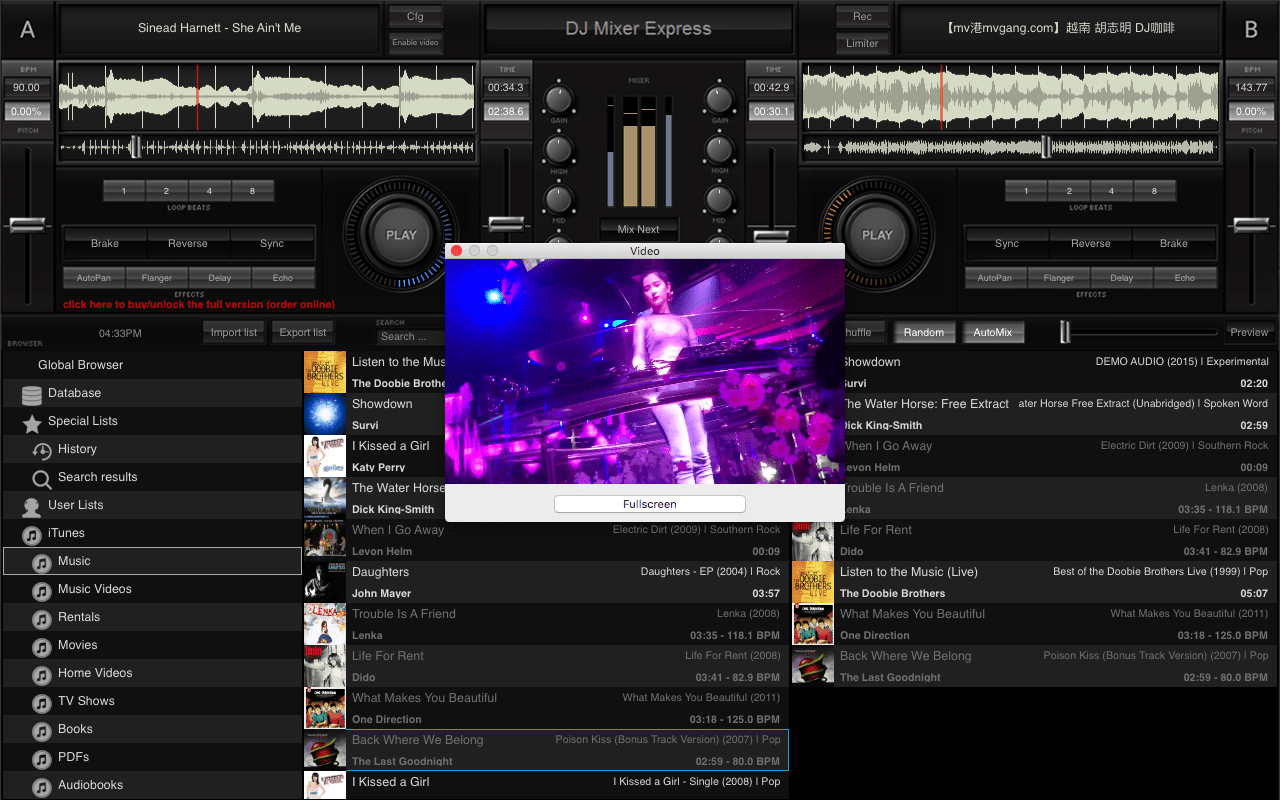

For mixing, I selected a folder of my own finished electronic music. You can download the tracks at this Soundcloud account. I added the folder to Rapid Evolution, selected all tracks and then clicked Detect > All. This gives us the key and BPM as well. After the processing the software shows us. Ableton Live is one of several digital audio products that have genuinely transformed the music scene in the past few years. The software. MixPad Free Music Mixer.

We also intend to kickstart the online community around this toolkit and model it after other creative communities and toolkits (e.g., Processing, openFrameworks, Cinder++, etc.). eNTERFACE’17 participants will become this community’s power users and core members, and their resulting projects will be integrated as demonstrators for the toolkit.

Work plan and Schedule

Our work plan for July 3-7 covers one specific subset of the RAPID-MIX API each day, with the possibility of a mentored project work extension period.

1. Sensor input, biosensors, training data
2. Signal processing and Feature Extraction
3. Machine Learning I: Classification and Regression
4. Machine Learning II: Temporal-based and gesture recognition
5. Multimodal data repositories and collaborative sound databases

AGILE and the RAPID-API

We are excited to announce that we are working with AGILE, a European Union Horizon 2020 Research Project developing and building a modular software-and-hardware gateway for the Internet of Things (IoT). We will be combining the RAPID-API with the modular hardware of the AGILE project. Their smart hardware provides a multitude of ways to interface with the real world through sensors, and the RAPID-API will give people ways to harness and use that real-world data.

You’ll be able to see some of the results of this collaboration at Adaptation, a media arts event organised by AGILE. Artists are invited to submit proposals to Adaptation for works which represent data through audiovisual experiences, and selected artists will work with technologists to realise their ideas. We will be working with AGILE to make the RAPID-API available to help with this realisation. Find out more about Adaptation and how to get involved. Find out more about AGILE.

Workshop in “Musical Gesture as Creative Interface” Conference

On March 16th, Atau Tanaka and Francisco Bernardo of the Goldsmiths EAVI team delivered a workshop on “Interactive Applications in Machine Learning” to the attendees of the International Conference on “Musical Gesture as Creative Interface” in Porto.

About 15 participants took a hands-on approach to electromyography and machine learning for music making, using the RAPID-MIX tools BITalino and Wekinator, and Max for biosignal conditioning and signal sonification with several synthesis and mapping approaches. The workshop ran to a very successful end, with many of the participants achieving the full setup and engaging in joint experimentation with music making out of biosignals.

The Wekinator, Ableton Live and the LOOP summit

The Wekinator, a highly usable piece of software that helps you incorporate gestural interaction into artistic projects, got a nice mention on the Ableton blog. The post was about the recent Loop summit, which explored the cutting edge of music tech, and it rightly identifies the potential for developing new musical instruments with the Wekinator. The post also mentions RAPID MIX partners and their. Read more here: The Wekinator is a core RAPID MIX technology, developed by team member Rebecca Fiebrink.

Behind the user friendly interface is a wealth of highly sophisticated machine learning technology that will be at the heart of many future RAPID MIX projects. If you’ve not used Wekinator for your gestural music or art, you should have a play with it. Make sure you’ve got the latest version of Wekinator, and keep us informed about what you’re doing with it.

We’d love to hear about any projects using the Wekinator with Ableton. You can also still sign up for the online course “Machine Learning for Musicians and Artists”, taught by Rebecca.

New Wekinator and Online Course in Machine Learning

RAPID MIX team member Rebecca Fiebrink has launched a new version of the machine learning software Wekinator, an incredibly powerful yet user friendly toolkit for bringing expressive gestures into your music, art, making, and interaction design. This is a major new version that includes dynamic time warping alongside new classification and regression algorithms.
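For readers unfamiliar with dynamic time warping (DTW), here is a minimal textbook sketch of the technique for one-dimensional sequences. It is not Wekinator’s implementation; the function names `dtw_distance` and `classify` and the template data are assumptions for illustration only.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed predecessor steps.
            cost[i][j] = d + min(cost[i - 1][j],      # a advances, b repeats
                                 cost[i][j - 1],      # b advances, a repeats
                                 cost[i - 1][j - 1])  # both advance
    return cost[n][m]


def classify(gesture, templates):
    """Return the label of the template with the smallest DTW distance."""
    return min(templates, key=lambda label: dtw_distance(gesture, templates[label]))
```

Because DTW lets either sequence stretch in time, a gesture performed slowly still matches a template recorded quickly, which is why it suits gesture recognition. For example, `classify([0, 0, 1, 2, 3, 3], {"swipe": [0, 1, 2, 3], "hold": [1, 1, 1, 1]})` picks `"swipe"`.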

You can download it here (for mac/windows/linux) along with many new examples for connecting it to real-time music/animation/gaming/sensing environments. The technology that drives Wekinator will feature in many RAPID MIX products that you’ll be hearing about in the future. The launch coincides with the launch of a free online class run by Rebecca, Machine Learning for Musicians and Artists. If you’re interested in machine learning for building real-time interactions, sign up! No prior machine learning knowledge or mathematical background is necessary. The course is probably most interesting for people who can already program in some environment (e.g., Processing, Max/MSP) but should still be accessible to people who don’t.

The course features two guest lecturers, music technology researcher Baptiste Caramiaux and composer/instrument builder/performer Laetitia Sonami.

ROLI releases NOISE: a free app that turns the iPhone into an expressive musical instrument

It’s certainly a day for exciting news at RAPID MIX!

RAPID MIX partners ROLI, builders of the beautiful Seaboard and owners of JUCE, have released an iPhone app that turns your phone into a truly expressive musical instrument. NOISE is the most full-bodied instrument to ever hit the glass surface of an iPhone. It turns the iPhone screen into a continuous sonic surface that responds to the subtlest gestures. Taking advantage of the new 3D Touch technology of the iPhone 6s, the surface lets music-makers shape sound through Strike, Press, Glide, Slide, and Lift – the “” that customers and critics have celebrated on ROLI’s award-winning. As an instrument NOISE features 25 keywaves, includes 25 sounds, and has five faders for fine-tuning the touch-responsiveness of the surface. It is for music-makers of any skill level who want to explore a that fits in their pockets. NOISE is also the ultimate portable sound engine for the Seaboard RISE and other MIDI controllers. Powered by and using MIDI over Bluetooth, NOISE lets music-makers control sounds wirelessly from their iPhones.

It is one of the first apps to enable Multidimensional Polyphonic Expression (MPE) through its MPE Mode, so the NOISE mobile sound engine works with any MPE-compatible controller. ROLI’s sound designers have crafted the app’s 25 preset sounds – which include Breath Flute and Extreme Loop Synth – especially for MPE expressivity. Additional sounds can be purchased in-app.

With a Seaboard RISE, a, and NOISE on their iPhones, music-makers now have a connected set of portable tools for making expressive music on the go. While it works with all models of iPhone from the iPhone 5 to the iPhone 6s, NOISE has been optimized to take full advantage of 3D Touch on the iPhone 6s.

RAPID-MIX at the JUCE Summit

RAPID-MIX team member and Goldsmiths reader in creative computing Mick Grierson was present at the JUCE summit on 19-20th November, alongside representatives from Google, Native Instruments, Cycling ’74 and other key music industry players. Mick is Innovation Manager for RAPID-MIX, running the whole project as well as being a key developer of MIX technologies.

Even if you haven’t heard of JUCE, chances are you’ve used it without knowing. It is an environment in which you can build plug-ins, music software and more, and is perhaps best known as the environment in which Cycling 74’s Max MSP is built and runs.

It’s ever present in the background of a lot of audio software. The summit brought together key technologists in the music industry, and it was a pleasure and an honour to have a RAPID MIX presence amongst them. Mick spoke between David Zicarelli (from Cycling 74) and Andrew Bell (Cinder), presenting his C++ audio DSP engine Maximilian and describing how it integrates with JUCE, Cinder, openFrameworks and other tools. Maximilian is an incredibly powerful, lightweight audio engine which will form a key part of the RAPID API we will be launching soon.

If you want to dig deeper, check out the Maximilian library on GitHub here: JUCE is now owned by RAPID-MIX partners ROLI, and we’re excited about future developments involving RAPID-MIX, JUCE and the creative music industry partners present at the summit. Stay tuned for more news!

Kurv Guitar Launch

We are delighted to announce the launch of the Kickstarter for the Kurv guitar.

This is the first of many projects and products that are powered by RAPID MIX technologies. It’s the RAPID MIX gesture recognition and synthesis technologies, along with our own hardware design and Bluetooth firmware, that make projects like the Kurv Guitar possible. We expect this to be the first of many musical interfaces, ranging from games to serious musical instruments, that help turn the human body into an expressive musical tool using RAPID MIX technologies.

Check out their Kickstarter below.

Second Meeting at IRCAM, Paris

The next RAPID MIX meeting will be at IRCAM in Paris from the 20th – 22nd May. This meeting is to plan and prepare for the workshops and user centred design sessions we will be doing before and during the Barcelona Hackday. We will be reviewing the different technologies that the partners can bring for people to work with, thinking about some early prototypes and preparing strategies and challenges for the hackers. We’re excited about bringing these different technologies together and starting to put them in the hands of the artists, musicians and programmers who are going to be using them.

Barcelona Kickoff Meeting!

We are in Barcelona at the Music Technology Group, UPF for our long awaited kick off meeting for our new EC Horizon2020 funded ICT project, RAPID MIX.

This is an initial meeting for all the partners to meet each other, discuss the different work packages that constitute the project, and start to get inspired by sharing research, products and technologies.

Nice to feel welcome with wayfinding!
Sergi Jorda (Music Technology Group) reminds us of project objectives
ReacTable Systems: Gunter Geiger
Alba Rosado from MTG on Workpackage 1.

The RAPID-MIX API integrates the sound-related feature sets of all the technologies into a sophisticated, simple to use, cross-platform audio engine. Its syntax and program structure are based on the popular ‘Processing’ environment. It provides standard waveforms, envelopes, sample playback, resonant filters and delay lines, and includes equal power stereo, quadraphonic and 8-channel ambisonic support.
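As an illustration of what “equal power stereo” means, here is a minimal sketch of the standard sine/cosine pan law. It is not taken from the RAPID-MIX API or Maximilian; the function name `equal_power_pan` and the 0-to-1 pan range are assumptions made for this example.

```python
import math


def equal_power_pan(sample, pan):
    """Split a mono sample into (left, right) with constant total power.

    pan is assumed to run from 0.0 (hard left) through 0.5 (centre)
    to 1.0 (hard right); values outside that range are clamped.
    """
    pan = min(1.0, max(0.0, pan))
    theta = pan * math.pi / 2.0
    # cos^2 + sin^2 = 1, so left^2 + right^2 equals sample^2 at every position.
    return sample * math.cos(theta), sample * math.sin(theta)
```

With a linear pan law the centre position would sound quieter than the extremes. The sine/cosine law keeps perceived loudness roughly constant as a sound moves across the stereo field.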

There are also granular synthesisers with timestretching, FFTs and music information retrieval.

Diemo Schwarz

Diemo Schwarz is a researcher–developer in real-time applications of computers to music with the aim of improving musical interaction, notably sound analysis–synthesis, and interactive corpus-based concatenative synthesis. He holds a PhD in computer science applied to music, for developing a new method of concatenative musical sound synthesis by unit selection from a large database. This work is continued in the CataRT application for real-time interactive corpus-based concatenative synthesis within Ircam’s Sound Music Movement Interaction team (ISMM). His current research comprises uses of tangible interfaces for multi-modal interaction, generative audio for video games, virtual and augmented reality, and the creative industries. As an artist, he composes for dance, video, and installations, and interprets and performs improvised electronic music with his solo project Mean Time Between Failure, in various duos and small ensembles, and as a member of the 30-piece ONCEIM improvisers orchestra.

Amaury La Burthe

Amaury holds an MSc from IRCAM. He first worked as assistant researcher for Sony-ComputerScienceLaboratory and then as lead audio designer for video game company Ubisoft in Montreal.

In 2009 he founded the start-up AudioGaming, focused on creating innovative audio technologies. AudioGaming expanded its activities in 2013 through its brand Novelab, which creates immersive and interactive experiences (video games, VR, installations). Amaury recently worked on projects like Type:Rider with Arte and Kinoscope with Google as executive producer, and Notes on Blindness VR as creative director and audio director.

Joseph Larralde

Joseph Larralde is a programmer in IRCAM’s ISMM team. He’s also a composer / performer using new interfaces to play live electroacoustic music, focusing on the gestural expressiveness of sound synthesis control.

His role in the RAPID-MIX project is to develop a collection of prototypes that demonstrate combined uses of all the partners’ technologies, to bring existing machine-learning algorithms to the web, and more broadly to merge IRCAM’s software libraries together with the ones from UPF and Goldsmiths in the RAPID-API.

Panos Papiotis

Panagiotis Papiotis received his B.Sc. in Computer Science from the Informatics Department of the Athens University of Economics and Business, Greece, in 2009, and his M.Sc. in Sound and Music Computing from the Dept. of Information and Communication Technologies of the Universitat Pompeu Fabra in Barcelona, Spain, in 2010. He is currently a Ph.D. student at the Music Technology Group at Universitat Pompeu Fabra, where he is carrying out research on computational analysis of ensemble music performance as well as multimodal data acquisition and organisation. He has participated in the FP7 project SIEMPRE (FP7-250026).

Adam Parkinson is a researcher, performer and curator based in the EAVI research group. He was artistic co-chair of the 2014 New Interfaces for Musical Expression conference, and has organised events at the Whitechapel Gallery alongside the long running EAVI nights in South East London. As a programmer and performer he has worked with artists including Arto Lindsay, Caroline Bergvall, Phill Niblock, Rhodri Davies and Kaffe Matthews, and has performed throughout Europe and North America. He is interested in new musical instruments and the discourses around them, and the cultural and critical spaces between academia and club culture.

Francisco Bernardo

Francisco Bernardo is a researcher, an interactive media artist and a software designer. He holds a B.S. in Computer Science and Systems Engineering and an MSc. in Mobile Systems, both from University of Minho. He also holds an M.A. in Management of Creative Industries from Portuguese Catholic University, with specialism in Creativity and Innovation in the Music Industry. He lectured at Portuguese Catholic University and worked in the software industry in R&D for Corporate TV, Interactive Digital Signage and Business Intelligence, developing video applications, complex user interface architectures, and interaction design for desktop, web, mobile and augmented reality applications. Currently, Francisco is a PhD candidate in Computer Science at Goldsmiths University of London, in the EAVI research group, focusing on new Human-Computer Interaction approaches to Music Technology.

Atau Tanaka is full Professor of Media Computing. He holds a doctorate from Stanford University, and has carried out research at IRCAM, Centre Pompidou and Sony Computer Science Laboratories (CSL) in Paris. He was one of the first musicians to perform with the BioMuse biosignal interface in the 1990s. Tanaka has been Artistic Ambassador for Apple and was Director of Culture Lab at Newcastle University, where he was Co-I and Creative Industries lead in the Research Councils UK (RCUK) £12M Digital Economy hub, Social Inclusion through the Digital Economy (SiDE). He is recipient of an ERC StG for MetaGesture Music, a project applying machine learning techniques to gain deeper understanding of musical engagement. He leads the UK Engineering & Physical Sciences Research Council (EPSRC) funded Intelligent Games/Game Intelligence (IGGI) centre for doctoral training.

Mick Grierson is Senior Lecturer and convenor of the Creative Computing Programme and Director of Goldsmiths Digital consultancy.

He has held Knowledge Transfer grants for collaboration with companies in the consumer BCI industry, the music industry and the games industry. In addition he works as a consultant to a wide range of SMEs and artists in areas of creative technologies. His software for audiovisual performance and interaction has been downloaded hundreds of thousands of times by VJs and DJs, and is used by high profile artists and professionals including a large number of media artists and application developers. Grierson was the main technology consultant for some of the most noteworthy gallery and museum installations since 2010 including Christian Marclay’s internationally acclaimed The Clock, which won the Golden Lion at the Venice Biennale; Heart n Soul’s Dean Rodney Singers (part of the Paralympics Unlimited Festival 2012 at London Southbank); and London Science Museum’s From Oramics to Electronica.

Rebecca Fiebrink is Lecturer in Computing Science. She works at the intersection of human-computer interaction, applied machine learning, and music composition and performance.

She convenes the course Perception and Multimedia Computing. Her research interests include new technologies for music composition, performance, and scholarship; enabling people to apply machine learning more efficiently and effectively to real-world problems; supporting end-user design of interactive systems for health, entertainment, and the arts; creating new gesture- and sound-based interaction techniques; designing and studying technologies to support creative work and humanities scholarship; music information retrieval; human-computer interaction; computer music performance; and education at the intersection of computer science and the arts.

The RAPID-MIX consortium accelerates the production of the next generation of Multimodal Interactive eXpressive (MIX) technologies by producing hardware and software tools and putting them in the hands of users and makers. We have devoted years of research to the design and evaluation of embodied, implicit and wearable human-computer interfaces and are bringing cutting edge knowledge from three leading European research labs to a consortium of five creative companies.

Methodologies

We draw upon techniques from user-centred design to put the end users of our tools and products at centre stage.

Uniquely, the “users” in our project include both individuals and small companies, ranging from musicians to health professionals, hackers, programmers, game developers and more. We will share what we learn about bringing these varied users directly into the development process. We will also be developing methodologies for evaluating the success of our technologies in people’s lives and artistic practices, and share our experiences of these methods.

RAPID-MIX API

The RAPID-MIX API will be a comprehensive, easy to use toolkit that brings together different software elements necessary to integrate a whole range of novel sensor technologies into products, prototypes and performances. Users will have access to advanced machine learning algorithms that can transform masses of sensor data into expressive gestures that can be used for music or gaming.

A powerful but lightweight audio library provides easy to use tools for complex sound synthesis.

Prototypes

We need fast product design cycles to reduce the gap between laboratory-based academic research and everyday artistic or commercial use of these technologies, and rapid prototyping is one way of achieving this. Our API tools are designed to help produce agile prototypes that can then feed back into how we tweak our API to make it as usable, accessible and useful as possible.

MIX Products

We anticipate that our technologies will be used in a variety of products, ranging from expressive new musical instruments to next generation game controllers, interactive mobile apps and quantified self tools. We want to provide a universal toolkit for plugging the wide ranging expressive potentials of human bodies directly into digital technologies.

RAPID-MIX is an Innovation Action funded by the European Commission (H2020-ICT-2014-1 Project ID 644862).

Release Notes for vMix 20 - 5 September 2017

Outputs
• Two additional independent outputs for vMix Call, Replay and NDI (Pro and 4K editions only)
• Second recorder with an independent recording format that can be assigned to one of the 4 outputs (Pro and 4K editions only)

Production Clocks
• New Dual Production Clock can now be enabled from Settings -> Options
• Each of the clocks can display either the current time, recording duration, streaming duration or a countdown to an event
• Clock display can also be added as an input which can be assigned to the MultiView Output
• Clock input can also be enabled as an NDI source on the network, providing a mobile clock that can be displayed anywhere using the free NDI tools!

vMix Call
• Audio and Video sources sent to guests can now be changed independently at any time from the right click menu
• All vMix editions including Basic HD can now connect to a remote vMix Call running HD or higher
• New Low Latency option added.

Release Notes for vMix 19 - 24 March 2017

vMix Call
• Add up to 8 remote guests to vMix quickly and easily with HD video and high quality full duplex audio.

• vMix Call requires a copy of vMix HD (1 Guest), vMix 4K (2 Guests) or vMix Pro (8 Guests)
• Each guest includes Automatic Mix minus for hassle free audio.

Release Notes for vMix 18 - 29 November 2016

Feature Highlights
• - link live data from Excel, CSV, Google Sheets, RSS, Text and XML to Titles within vMix
• - activate lights, button LEDs and motorised faders on supported MIDI and X-Keys controllers in response to changes in vMix.
• - new dockable interface for the List input allows quick and easy control over multiple video and audio files in a single input.

• Instant Replay - new features added to Instant Replay include 8 Event tabs (up from four), 3 digit ID codes assigned to each event and the ability to order events irrespective of timecode.
• Magewell Pro Capture SDK - Full support added which enables lower latency and multi-channel audio support from Magewell Pro Capture series cards.
• NDI - Reduced CPU usage across the board allows for more NDI inputs to be used at the same time.

User Interface
• Web Browser input now supports keyboard control through right click menu option.
• Custom title templates recently added will now be displayed along with a thumbnail preview from the Add Input > Title > Recent tab.

Release Notes for vMix 17 - 10 April 2016

Feature Highlights
• NDI - Network Device Interface support. See the page for more information.

• PTZ support for Web Enabled Panasonic, Sony and PTZ Optics cameras.
• Web Browser input. Easily add web pages as inputs including sites with video and audio content.

User Interface
• Categories now appear as tabs above the inputs row. Labels can be added by right clicking any category button.